Pranav—Fashionable deregulation

As last year was ending, Bhuvan and I were talking about what we thought the mega-trends of 2025 would be.

I thought Javier Milei’s deregulation plank would become more popular globally, perhaps turning into a political trend. Musk/Ramaswamy (lol) had started whipping up DOGE. American political rhetoric, I imagined, would soon infect the rest of the world, sparking a wave of copycat attempts everywhere.

Bhuvan disagreed. Reasonably so. DOGE, at least, looked like a gimmick, and with Musk in sole charge of the organisation, I don’t see it doing very much. Musk is great at bringing wild engineering ideas to fruition, but he’s not the best manager of people. And the job of government revolves entirely around managing people.

Even so, twenty-five days into the year, there’s more evidence that these ideas are catching on. What’s more curious is that this interest comes from the most unexpected of places—Vietnam and the EU.

Here’s an Asian, communist state announcing that it’s considering Milei-esque measures.

Here’s Europe, the bastion of welfarism, announcing a regulatory simplification plan.

Here's France considering a massive regulatory ‘pause’.

That these announcements all come from unexpected quarters gives me hope: they're less likely to be a quick grab for political brownie points. From what I can see, these aren't copycat events at all; they're more likely to be a technocratic project to remove restraints on economic growth.

That's a good thing. The DOGE/Milei branch of this idea seems married to the notion of a showdown with some ultra-woke cabal of shadow-elites that hate civilisation. That's unlikely to happen. The actual work of deregulation is unsexy at best and, at worst, could cut programs that help the very people cheering it on. Once people come to understand that, the political wave for deregulation could very well fizzle out.

Bhuvan—The AI wars

It feels like if you blink, you might miss a month's worth of progress in the dizzyingly fast world of AI. The pace of progress is ridiculous, to say the least. My default frame of thinking about AI is to assume that massive society-wide disruption is all but guaranteed. With each passing week, that seems increasingly likely.

Let me put it this way: if progress on AI were to flat-line tomorrow, which is improbable, and if the AI tech as it stands today were to be widely adopted, millions of entry-level jobs would be eliminated overnight. That's also why I'm sceptical of superlative framings like "superintelligence": they seem like vacuous, utterly meaningless terms.

At this point, even if one were to naively extrapolate the current rates of progress on AI, that old Chinese curse "May you live in interesting times" will come true in ways that will make us rue our existence.

Here's an incomplete list of recent developments.

You're about to be fired. Say hi to your replacement

OpenAI launched Operator, an automatic agent that can browse on your behalf and automate repetitive tasks like booking tickets, ordering groceries, etc. Anthropic had previewed something similar earlier.

What does this mean?

Imagine this: a year from now, you come to the office, put on your shiny headphones, and just sit there speaking into the microphone. As you do, OpenAI's Operator takes over your computer and does all your work better than you ever could. The only issue with this vision is that you're dreaming, because you have already been fired and Operator has replaced you. Sorry.

Of course, Operator as it stands today is half-baked and more or less useless. Casey Newton, who writes the awesome newsletter Platformer, took it for a spin and was less than impressed.

One issue seems to be that several sites block OpenAI's crawlers, and it remains to be seen how this will play out:

The downside is that many sites like Reddit already block AI agents from browsing so they can't be accessed by Operator. In this research preview mode, Operator is also blocked by OpenAI from accessing certain resource-intensive sites like Figma or competitor-owned sites like YouTube for performance or legal reasons.
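For the curious, one common way sites turn bots away is a `robots.txt` file. The quote above doesn't say which mechanism Reddit or anyone else uses, so treat this as a rough sketch under that assumption. The bot name `ExampleAIBot` and the sample file are made up for illustration; the check itself uses Python's standard `urllib.robotparser`.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt, written for illustration only.
# It is not copied from any real site.
SAMPLE_ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Allow: /
"""


def can_fetch(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if robots_txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    # parse() takes the file's lines, so no network request is made here.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    url = "https://example.com/r/python"
    print("ExampleAIBot allowed:", can_fetch(SAMPLE_ROBOTS_TXT, "ExampleAIBot", url))
    print("SomeBrowser allowed:", can_fetch(SAMPLE_ROBOTS_TXT, "SomeBrowser", url))
```

Keep in mind that `robots.txt` is only a request. A well-behaved agent honours it, but nothing in the file itself enforces the rule.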

While it may seem underwhelming, the question on my mind is how much better we can expect Operator and similar tools, which seem to be powered by LLMs, to become. If I've understood the recent commentary correctly, LLMs seem to have hit scaling bottlenecks, i.e., throwing ever more data and processing power at them is leading to diminishing returns.

Table stakes

This week, a Chinese hedge fund launched DeepSeek-R1, an open-source model that it says rivals OpenAI's o1, the premier reasoning model. Reasoning models supposedly "think" before they answer a query. The most interesting aspect of DeepSeek is that it's open source. So far, the response from users about DeepSeek's capabilities has been pretty positive, and that's been my experience with it as well.

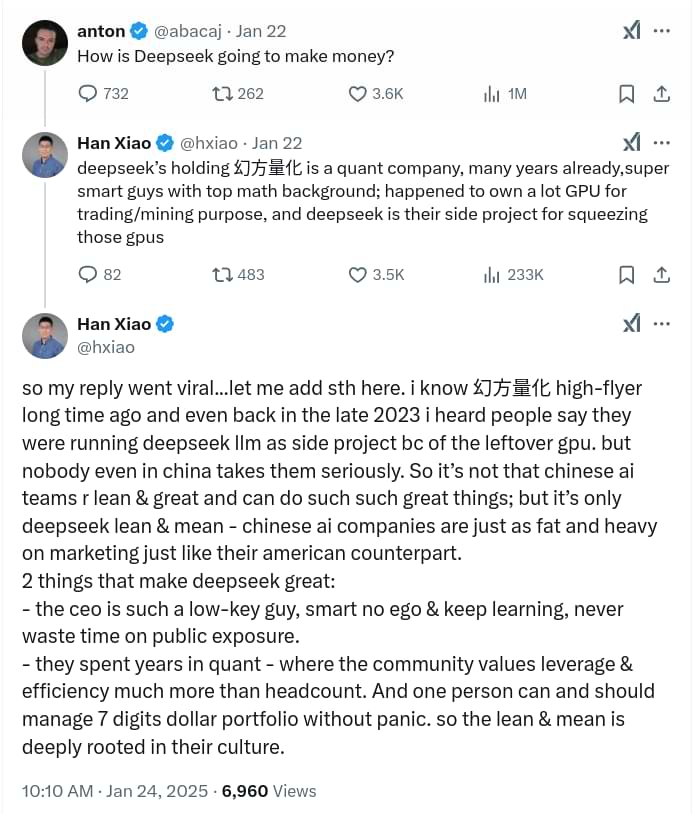

The launch of DeepSeek led to some interesting conversations on Twitter (I refuse to call it X). Before I get to that, here's an interesting tidbit about DeepSeek: it's apparently a side project for the hedge fund team.

Joe Weisenthal of Bloomberg asked the right question about what it all means:

I don't have some grand takeaway here yet, about what all this means for the business of AI or US-China tech competition or anything like that. But for the moment I'll just note, at least for now, switching costs (when it comes to these chatbots) are incredibly low, or non-existent. And also there seems to be this weird incongruity where we're talking about the need for hundreds of billions of dollars for AI infrastructure investment at a time when it appears (at least in theory) that you can get somewhat close to state-of-the-art on a low budget, with services delivered cheaply.

And keep in mind, Chinese technologists are launching models that rival those of US companies despite all the restrictions on advanced chips required to train these models. The model also seems to have been trained at a much lower cost compared to US models:

R1 is part of a boom in Chinese large language models (LLMs). Spun out of a hedge fund, DeepSeek emerged from relative obscurity last month when it released a chatbot called V3, which outperformed major rivals, despite being built on a shoestring budget. Experts estimate that it cost around $6 million to rent the hardware needed to train the model, compared with upwards of $60 million for Meta's Llama 3.1 405B, which used 11 times the computing resources.

Having limited computing power drove the firm to "innovate algorithmically", says Wenda Li, an AI researcher at the University of Edinburgh, UK. During reinforcement learning, the team estimated the model's progress at each stage, rather than evaluating it using a separate network. This helped to reduce training and running costs, says Mateja Jamnik, a computer scientist at the University of Cambridge, UK. The researchers also used a 'mixture-of-experts' architecture, which allows the model to activate only the parts of itself that are relevant for each task.
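To make the 'mixture-of-experts' idea a little more concrete, here is a toy sketch of top-k routing in plain Python. It is a generic illustration of the technique, not DeepSeek's actual architecture: the expert functions, the gate scores, and names like `route_top_k` and `moe_forward` are all made up for this example. In a real model the gate scores would come from a learned layer.

```python
import math


def softmax(scores):
    """Turn raw gate scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(gate_scores, k=2):
    """Pick the k highest-scoring experts and renormalise their weights."""
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return {i: probs[i] / norm for i in top}


def moe_forward(x, experts, gate_scores, k=2):
    """Run only the selected experts and blend their outputs."""
    weights = route_top_k(gate_scores, k)
    return sum(w * experts[i](x) for i, w in weights.items())


if __name__ == "__main__":
    # Four toy "experts"; each is just a simple function of the input.
    experts = [lambda x: x + 1, lambda x: x * 2, lambda x: x - 3, lambda x: x * x]
    gate_scores = [0.1, 2.0, -1.0, 1.5]  # assumed values, normally learned
    print(route_top_k(gate_scores))
    print(moe_forward(3.0, experts, gate_scores))
```

The saving comes from the routing step: with four experts and `k=2`, only half of them run for a given input, and the rest cost nothing for that step.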

Pranav wrote about the latest chip restrictions designed to kneecap China here.

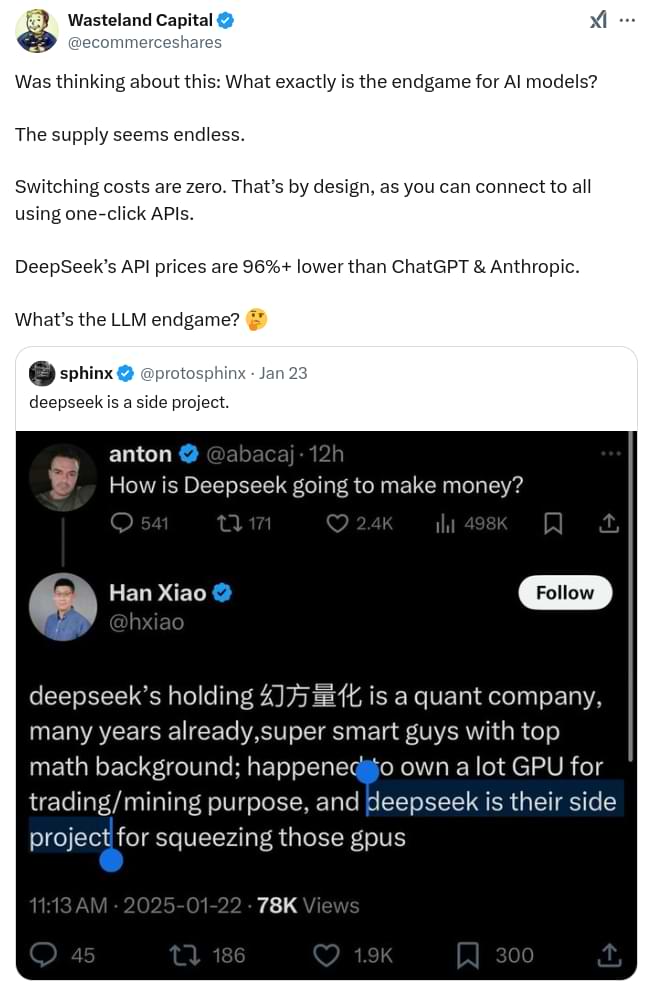

A Twitter user by the name of Wasteland Capital posed the perfect question: if there are no moats with just LLMs, what's the endgame for AI companies like OpenAI and Anthropic? What happens to the billions that these companies have burned and investors have sunk?

Anurag—The popularity of Reddit

I recently read up on why so many Google searches end up showing Reddit results right at the top. Here are a few thoughts I wanted to share:

Why Reddit works:

- Reddit’s subreddits are full of passionate, like-minded people. That makes them a great space to test ideas, gather feedback, or even find early customers in some cases.

- People inherently trust Reddit more than ads, blogs, or influencers. It’s raw, honest, and community-driven.

- Google ranks Reddit threads higher because they’re authentic and rich in detail. As a habit, many people have now started to add “Reddit” to their searches (e.g., “best laptops Reddit”) to find non-sponsored & genuine answers.

- Reddit takes upvotes and downvotes much more seriously than any other platform does. Upvotes and downvotes keep spam and bad advice in check. Thus, the content feels genuine and helpful, unlike sponsored posts elsewhere.

What’s inherently driving it:

- People are tired of ads and paid promotions.

- They’re looking for real, unfiltered opinions and answers.

- People absolutely hate fake and sales-y posts.

And as a result, AI companies are going crazy for the platform's data. Given that all of us, at some point, have nudged ChatGPT to behave more like a human, Reddit is a goldmine for AI companies. If they can get access to raw, unfiltered conversations from real humans, their models could improve by leaps and bounds.

So, it's kinda funny how a platform that's built on being anti-fake is actually the one feeding into building AI systems of the future.

That's it for today. If you liked this, give us a shout by tagging us on Twitter.